A data science team’s process is a key driver of its projects’ success. However, as discussed below, there is no existing AI / data science maturity model that focuses on how to evaluate (and improve) a team’s process.

Hence, after reviewing several Analytics / Data Science maturity models, as well as key attributes of a data science team process, I close this blog with five key questions a team can use to evaluate the effectiveness and maturity of its data science process.

Feel free to jump to the end to see those five key questions, or read on for the full story.

Why Evaluate and Improve a Team’s Analytics / Data Science Process?

Data science projects face unique challenges and are increasingly a “team sport”. Hence, it’s not surprising that a team’s process and culture are the key challenge to an organization’s ability to leverage AI / predictive analytics. Indeed, as reported by New Vantage, 92% of big data and AI executives identify culture / process as their key challenge.

In short, because data science projects differ from software development projects, applying a software process framework or simply “winging it” doesn’t deliver sustainable value. To deliver higher-value insights with less time and effort, teams need a repeatable process that is effective for analytics / data science projects.

To summarize some of the key reasons to improve a team’s data science process:

- BETTER and FASTER insights will be generated during the project

- Team PRODUCTIVITY will improve

- The team will explore the MOST PROMISING opportunities

- More generally, COLLABORATION will be enhanced, both within the team and with extended team members (such as stakeholders)

- Due to these improvements, the team’s MORALE will also typically improve

Exploring a Typical Analytics / Data Science Maturity Model

Analytics and Data Science Maturity Models typically focus on whether and how an organization can leverage data (e.g., is there a data-driven culture, is the appropriate data available). In other words, this kind of data science maturity measures how well an organization can collect, analyze, and consume data, as well as generate useful predictive models for decision-making across the organization.

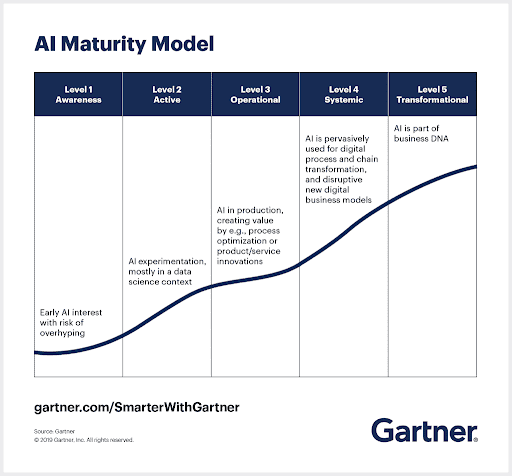

Gartner’s Data Science Maturity Model

Perhaps the most common Data Science Maturity Model is Gartner’s AI maturity model, which segments organizations into five levels of maturity based on their ability to use predictive models:

- Awareness (Level 1) – The organization knows about AI but hasn’t used it yet.

- Active (Level 2) – The organization is leveraging AI via prototypes and proof-of-concepts.

- Operational (Level 3) – The organization has adopted machine learning within their day-to-day tasks, with a data science team (ex. data scientists, ML engineers).

- Systemic (Level 4) – The organization uses machine learning in innovative ways to improve their business and more broadly, disrupt business models.

- Transformational (Level 5) – The organization is using data science / machine learning pervasively. Machine learning is central to the value the organization offers its customers.

Gartner’s Analytics Maturity Model

Ten years ago, Gartner proposed a simpler Analytics Maturity Model, which describes increasing levels of organizational analytics capability:

- Descriptive Analytics: What happened?

- Diagnostic Analytics: Why did it happen?

- Predictive Analytics: What will happen?

- Prescriptive Analytics: How can we make it happen?

Domino’s Data Science Maturity Model

Similar to Gartner’s AI maturity model, Domino has also shared a framework called the Data Science Maturity Model (DSMM), which has four levels:

- Ad-hoc Exploration (Level 1)

- Repeatable, but Limited (Level 2)

- Defined and Controlled (Level 3)

- Optimized and Automated (Level 4)

Gartner’s analytics maturity model is much simpler than its AI maturity model (or Domino’s data science maturity model). However, all of these maturity models focus on an organization’s ability to leverage advanced analytics and machine learning models.

In other words, these capability models assess specific organizational capabilities that support data science / analytics projects, such as:

- Infrastructure (ex. technical architecture, platforms, and tools)

- Data Management (ex. support for collecting and cleaning the data)

- Analytics (ex. speed, agility, automation, and in general, how data is leveraged)

- Governance (ex. who is championing / prioritizing work)

- Breadth and depth of impact (ex. how data-driven predictive insights are leveraged)

Key Challenges when Using an Analytics / Data Science Maturity Model

As you can see, these Analytics / Data Science Maturity Models are useful for understanding an organization’s overall maturity with respect to using AI and predictive models. However, they are not as useful for evaluating a data science team’s process.

Certainly, a key factor in an organization’s ability to leverage analytics / data science (i.e., machine learning predictive models) is the maturity of the process it uses to execute data science projects. In other words, to better leverage AI / predictive models, an organization needs an effective data science team process.

However, these Analytics / Data Science maturity models do not go into enough depth to actually evaluate a data science team’s process.

How to Evaluate a Team’s Analytics / Data Science Process?

With this in mind, to help evaluate a team’s data science process, below are five key process components that are important to evaluate:

- Life Cycle Usage – Using a shared mental model of the steps in an ML/Data Science project

- Agile Coordination – Prioritizing potential tasks and delivering incremental results

- Integrated Team Process Coordination – Coordinating the team’s overall process, including how it works with other teams (ex. software development)

- Metrics – Measuring success (and ensuring everyone understands ‘what is success’)

- Ongoing Process Improvement – Evaluating and improving the process

The first two (life cycle and agile coordination) are specific to data science, so they are described in more detail below. The other three apply to most types of projects, so they are not explored further here.

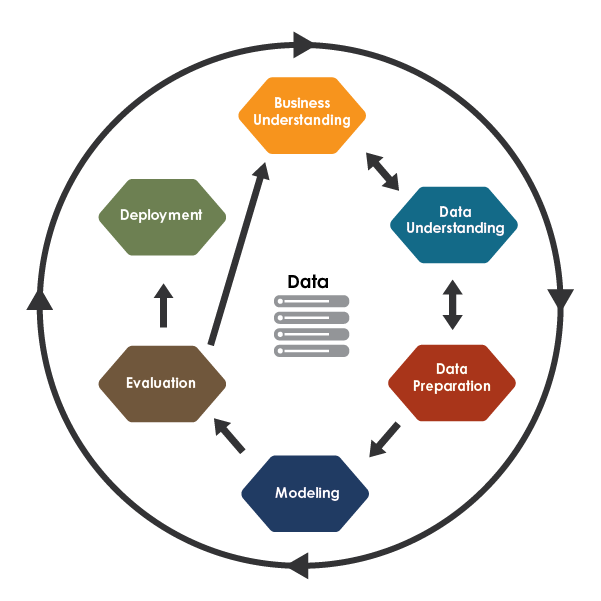

What is a Data Science Life Cycle?

A data science life cycle is an iterative set of steps a team executes to deliver a model. While different projects might have different life cycles, most data science projects flow through the same general set of steps.

CRISP-DM is the most popular framework for executing data science projects, and it provides a natural description of a data science life cycle. As shown in the CRISP-DM phase diagram, the phases start with business understanding and end with deployment.

Benefits of Using a Life Cycle

- Enables the extended team (ex. stakeholders) to understand the work required to build and deploy a model, as well as the steps within an ML / data science project

- Ensures all aspects of a project are considered, such as model quality (ex. model validation, bias) and MLOps (ex. ML operational support)

Why Teams Need More Than “Just a Life Cycle”

However, a life cycle is not enough: a task-focused approach to executing projects fails to address team and communication issues. Thus, it is important to combine a life cycle with a team coordination framework. In short, data science teams that combine a loose implementation of CRISP-DM with a team-based agile project management framework will typically see the best results.

What is Agile Coordination?

Agile data science is a set of principles that encourages teams to:

- Work on small, incremental deliverables

- Share and evaluate the incremental results with stakeholders

- Reprioritize project plans based on knowledge from previous increments

Scrum is the most popular agile framework for software development. Scrum defines values, roles, artifacts, and meetings. However, data science is not software development, so different agile approaches are often more appropriate.

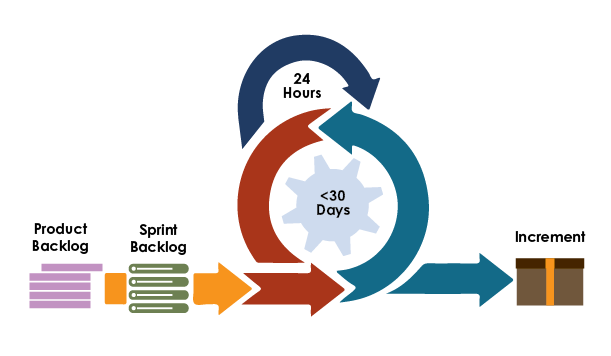

In Scrum, the product owner continuously updates a prioritized wish list (the product backlog) of potential product features / questions to answer. The development team works to deliver the top-priority items in short, fixed-length iterations called sprints. At the end of each sprint, the team demonstrates its results at the sprint review meeting and then holds a retrospective meeting to improve its process.
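To make the backlog mechanics concrete, here is a minimal Python sketch of a prioritized list of “Questions to Answer” and of pulling top-priority items into a fixed-length sprint. It is an illustration under stated assumptions, not part of Scrum itself: the `Question` and `Backlog` classes, the integer priority (lower means more important), the effort estimates in days, and the greedy rule for filling sprint capacity are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Question:
    """Hypothetical backlog item: one small question the team wants answered."""
    text: str
    priority: int          # assumption: lower number = higher priority
    estimate_days: float   # assumption: rough effort estimate in days


@dataclass
class Backlog:
    """Hypothetical product backlog maintained by the product owner."""
    items: list[Question] = field(default_factory=list)

    def add(self, question: Question) -> None:
        self.items.append(question)

    def reprioritize(self, text: str, new_priority: int) -> None:
        # The product owner adjusts priorities, e.g. after stakeholder feedback.
        for q in self.items:
            if q.text == text:
                q.priority = new_priority

    def plan_sprint(self, capacity_days: float) -> list[Question]:
        # Walk the backlog in priority order and take each question that still
        # fits in the sprint's capacity; questions that do not fit are skipped.
        selected: list[Question] = []
        used = 0.0
        for q in sorted(self.items, key=lambda item: item.priority):
            if used + q.estimate_days <= capacity_days:
                selected.append(q)
                used += q.estimate_days
        return selected


backlog = Backlog()
backlog.add(Question("Which features predict churn?", priority=1, estimate_days=4))
backlog.add(Question("Is the churn label reliable?", priority=2, estimate_days=3))
backlog.add(Question("Does a baseline model beat the heuristic?", priority=3, estimate_days=5))

sprint_1 = backlog.plan_sprint(capacity_days=8)

# After the sprint review, stakeholders decide the baseline question matters most.
backlog.reprioritize("Does a baseline model beat the heuristic?", new_priority=0)
sprint_2 = backlog.plan_sprint(capacity_days=8)
```

Here `sprint_1` holds the first two questions (7 of 8 days). After reprioritizing, `sprint_2` holds the baseline question plus the label check, because the 4-day churn question no longer fits. A team might prefer to stop at the first item that does not fit rather than skip ahead; the greedy skip rule is just one simple choice.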

Benefits of Agile Coordination

- Enables the data scientists to have a prioritized list of “Questions to Answer”

- Ensures stakeholders understand and agree on the prioritized list of “Questions to Answer”

- Encourages small iterations, so teams “fail fast” as a normal part of the project

- Provides a mechanism for stakeholders to give feedback after each iteration

- Ensures the extended team understands the project goals and timelines

Five Questions to Help Evaluate a Team’s Process

Understand a Team’s Analytics / Data Science Process Maturity

As noted above, none of the common analytics / data science maturity models is designed to evaluate a data science team’s process. So, the following questions (and associated best practices) can help a team evaluate its process.

1. Life Cycle Usage

Q: Does the team have a fully defined life cycle that is used by all team members and stakeholders?

Life Cycle Best Practices:

- Shared Mental Model Defined & Used

- Life Cycle covers quality (ex. Validation, Bias)

- Life Cycle includes operations (ex. MLOps)

- Life Cycle is understood by the extended team (ex. stakeholders)

2. Agile Coordination

Q: Does the team deliver value incrementally, and get feedback from stakeholders on each iteration?

Agile Coordination Best Practices:

- Prioritized list of “Questions to Answer” (each question is “small”)

- Stakeholders help prioritize iterations (tasks / questions to answer)

- Each iteration has analysis & feedback and helps reduce project ambiguities

- Project goals and timelines are understood by stakeholders

3. Integrated Team Process Coordination

Q: Does the team have an integrated team process?

Team Integration Best Practices:

- The team has integrated the life cycle and coordination frameworks into one overall team process

- The Data Science team has a defined process to work with other teams (ex. software dev)

4. Team Metrics

Q: Does the team have defined metrics to measure project success?

Metric Best Practices:

- Defined project / business metrics, so everyone understands what project ‘success’ means.

- Tracked metrics on team efficiency and effectiveness (e.g., percent of time spent working on the top-priority project).
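The post does not define how to compute this efficiency metric, so the following is only a minimal Python sketch. It assumes a hypothetical time log of `(person, project, hours)` entries and reports the share of logged hours spent on the top-priority project, both for the whole team and per person.

```python
from collections import defaultdict


def percent_time_on_top_project(time_log, top_project):
    """Percent of all logged hours spent on top_project (0.0 if nothing is logged)."""
    total = sum(hours for _, _, hours in time_log)
    if total == 0:
        return 0.0
    on_top = sum(hours for _, project, hours in time_log if project == top_project)
    return 100.0 * on_top / total


def percent_by_person(time_log, top_project):
    """Same metric per person, to spot who is being pulled onto other work."""
    totals = defaultdict(float)
    on_top = defaultdict(float)
    for person, project, hours in time_log:
        totals[person] += hours
        if project == top_project:
            on_top[person] += hours
    return {person: 100.0 * on_top[person] / totals[person]
            for person in totals if totals[person] > 0}


# Hypothetical one-week log for a two-person team.
time_log = [
    ("ana", "churn-model", 30),
    ("ana", "ad-hoc requests", 10),
    ("ben", "churn-model", 20),
    ("ben", "reporting", 20),
]
team_pct = percent_time_on_top_project(time_log, "churn-model")
person_pct = percent_by_person(time_log, "churn-model")
```

With this log, `team_pct` is 62.5 and `person_pct` is `{'ana': 75.0, 'ben': 50.0}`. Tracking the number sprint over sprint is likely more informative than any single reading.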

5. Process Improvement

Q: Does the team have an effective ongoing process improvement effort?

Process Improvement Best Practices:

- Regular process reviews with tracked action items for process improvement

- Regular reviews with tracked action items for team tools
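A team could turn the five questions into a lightweight self-assessment by checking off each best practice listed above. The sketch below is hypothetical: the post does not define a scoring scheme, so the equal weighting of practices, the 0 to 1 score per area, and the idea of starting with the lowest-scoring area are assumptions for illustration.

```python
from __future__ import annotations

# Best practices per process area, paraphrased from the five questions above.
CHECKLIST: dict[str, list[str]] = {
    "Life Cycle Usage": [
        "Shared mental model defined and used",
        "Life cycle covers quality (validation, bias)",
        "Life cycle includes operations (MLOps)",
        "Life cycle understood by the extended team",
    ],
    "Agile Coordination": [
        "Prioritized list of small questions to answer",
        "Stakeholders help prioritize iterations",
        "Each iteration has analysis and feedback",
        "Goals and timelines understood by stakeholders",
    ],
    "Integrated Team Process Coordination": [
        "Life cycle and coordination integrated into one process",
        "Defined process for working with other teams",
    ],
    "Team Metrics": [
        "Defined project / business success metrics",
        "Tracked team efficiency and effectiveness metrics",
    ],
    "Process Improvement": [
        "Regular process reviews with tracked action items",
        "Regular tool reviews with tracked action items",
    ],
}


def score(answers: dict[str, bool]) -> dict[str, float]:
    """Fraction of best practices met in each area; unanswered counts as not met."""
    return {
        area: sum(answers.get(practice, False) for practice in practices) / len(practices)
        for area, practices in CHECKLIST.items()
    }


def weakest_area(scores: dict[str, float]) -> str:
    """Area with the lowest score (ties go to the area listed first)."""
    return min(scores, key=scores.get)


# Hypothetical team: solid life cycle, partial agile coordination, nothing else yet.
answers = {practice: True for practice in CHECKLIST["Life Cycle Usage"]}
answers["Prioritized list of small questions to answer"] = True
answers["Stakeholders help prioritize iterations"] = True

scores = score(answers)
focus = weakest_area(scores)
```

Equal weights keep the sketch simple; a team might instead weight practices by importance, or record the scores at each process review to see whether its improvement actions are working.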

Learn More about Using an Analytics / Data Science Maturity Model

- Read more about the key aspects of a data science process

- Explore Agile Data Science

- Review the overall Data Science Life Cycle