What Is the KDD Process?

Dating back to 1989, Knowledge Discovery in Databases (KDD) represents the overall process of collecting data and methodically refining it.

The KDD Process is a classic data science life cycle that aspires to purge the ‘noise’ (useless, tangential outliers) while establishing a phased approach to derive patterns and trends that add important knowledge.

What is the Data Mining Process?

The term “data mining” is often used interchangeably with KDD. The confusion is understandable, but “Knowledge Discovery in Databases” is meant to encompass the overall process of discovering useful knowledge from data. Meanwhile, “data mining” refers to the fourth step in the KDD process. This is commonly thought of as the “core step”, which applies algorithms to extract patterns from the data. It parallels the “modeling” phase of other data science processes.

In short, the KDD Process represents the full process and Data Mining is a step in that process.

Why KDD and Data Mining?

In an increasingly data-driven world, it would seem there is no such thing as too much data. However, data is only valuable when you can parse, sort, and sift through it in order to extract its actual value.

Most industries collect massive volumes of data, but without a filtering mechanism that can graph, chart, and trend it, raw data by itself has little use.

However, the sheer volume of data and the speed with which it is collected make sifting through it challenging. Thus, it has become economically and scientifically necessary to scale up our analysis capability to handle the vast amount of data that we now obtain.

Since computers have allowed humans to collect more data than we can process, we naturally turn to computational techniques to help us extract meaningful patterns and structures from vast amounts of data.

What is KDDS?

In 2016, Nancy Grady of SAIC published Knowledge Discovery in Data Science (KDDS), describing it “as an end-to-end process model from mission needs planning to the delivery of value”. KDDS specifically expands upon KDD and CRISP-DM to address big data problems. It also provides some additional integration with management processes. KDDS defines four distinct phases (assess, architect, build, and improve) and five process stages (plan, collect, curate, analyze, and act).

KDDS can be a useful expansion for big data teams. However, KDDS only addresses some of the shortcomings of CRISP-DM. For example, it is not clear how a team should iterate when using KDDS. In addition, its combination of phases and processes is less straightforward. Adoption of KDDS outside of SAIC is not known.

What are the KDD Process Steps?

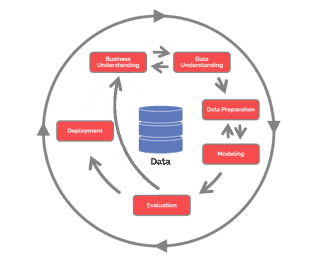

KDD often draws differing interpretations of how many distinct steps are involved in its process. While KDD variants can range from 5 to 7 steps, many regard KDD as the following 5-step process:

- Selection: From a database of compiled data, the target data is chosen, along with the variables that will be used for knowledge discovery.

- Pre-processing: This stage is all about improving the quality of the data being worked with and incorporates the concept of data cleaning. Predictive models for unreliable data are established in order to anticipate faulty, missing, or mismatched attribute values, which are then worked out of later stages.

- Transformation: This phase concentrates on converting the pre-processed data into a fully usable form. The scope is narrowed in terms of variety, and the data attributes are firmly established for the forthcoming evaluation. Here the information is organized and sorted, and often unified into a single type.

- Data Mining: As the best-known aspect of the process, the data mining stage focuses on sifting through the transformed data to seek out patterns of interest. These patterns are graphed, trended, and charted in the form most helpful to the purpose for which KDD is being conducted. The methods used in this phase include grouping, clustering, and regression, with the choice of one or more depending on the outcome expected and desired from the process.

- Interpretation/Evaluation: The final phase is one during which the data is handed off for interpretation and documentation. At this point, the data has been cleaned, converted, picked apart based on relevant attributes, and framed into visual representations to help humans better evaluate the curated output.
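The five steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions, not a reference implementation: the customer records, field names, and function names are all hypothetical, pre-processing is reduced to simply dropping incomplete records (rather than building predictive models for unreliable data), and ordinary least-squares regression stands in for whichever mining method a real project would choose.

```python
from statistics import mean

# Hypothetical raw records; every field name and value is made up for illustration.
RAW = [
    {"id": 1, "visits": 2, "spend": 20.0, "region": "north"},
    {"id": 2, "visits": 4, "spend": 40.0, "region": "south"},
    {"id": 3, "visits": None, "spend": 35.0, "region": "north"},  # missing value
    {"id": 4, "visits": 6, "spend": 60.0, "region": "south"},
    {"id": 5, "visits": 8, "spend": 80.0, "region": "north"},
]


def select(records):
    """Selection: keep only the variables chosen for analysis."""
    return [{"visits": r["visits"], "spend": r["spend"]} for r in records]


def preprocess(records):
    """Pre-processing: drop records with missing values (a deliberately simple cleaning rule)."""
    return [r for r in records if all(v is not None for v in r.values())]


def transform(records):
    """Transformation: unify the data into a single numeric type as (x, y) pairs."""
    return [(float(r["visits"]), float(r["spend"])) for r in records]


def mine(pairs):
    """Data mining: fit spend = slope * visits + intercept by ordinary least squares."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in pairs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def interpret(model):
    """Interpretation/Evaluation: turn the fitted pattern into a human-readable statement."""
    slope, intercept = model
    return f"Each extra visit is associated with about {slope:.2f} more spend (intercept {intercept:.2f})."


def run_kdd(records):
    """Chain the five steps in order."""
    return interpret(mine(transform(preprocess(select(records)))))


if __name__ == "__main__":
    print(run_kdd(RAW))
```

Keeping each step as its own function mirrors the phased structure of KDD and makes it easy to revisit a single stage, for example swapping the cleaning rule or the mining method, when later findings are fed back into the process.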

Pros and Cons of the KDD Process

KDD is an immensely helpful tool for keeping businesses and industries current with customer needs, behaviors, and actions. There are some clear advantages to using the KDD methodology, as well as some challenges in its usage.

Pros and Use Cases of the KDD Process:

- Market Forecasting: Businesses need to be able to sell their products to customers, and in order to sell products (or services) they must know what customers will buy. KDD helps to work out the predictive nature of consumer trends, identifying where the product focus should lie, and assists in predicting what other types of products consumers will want. This helps businesses gain a competitive edge over others in their field.

- Iterative Process: The KDD process is iterative, meaning that the knowledge acquired is cycled back into the process, enhancing its efficacy. In that way, the data is better refined at each stage by using formally acquired and previously unknown information (knowledge). This creates a loop that continues after the implementation of the final result, feeding right back into the establishment of objectives. Moreover, any part of the process contributes to knowledge-gathering, so lessons learned at any stage of KDD can be fed back to the start of the cycle.

- Anomaly Identification: The more we know about holes in a process, or security vulnerabilities, the better we can guard against them, using that knowledge to bolster process efficiency and security and to guide future development.

Cons of the KDD Process:

- Outdated: The process doesn’t address a lot of the modern realities of data science projects such as the setup of big data architecture, considerations of ethics, or the various roles in a data science team.

- Expensive: Storing massive, ever-growing volumes of data carries an obvious upfront cost. After all, before the data can be evaluated, learned from, and refined, it needs to be stored somewhere. Depending on the type of data collected, there might be a high cost associated with compiling it. Storage space and maintenance can come at a high cost even before any learning work begins.

- Security Vulnerability: In order to learn about customer trends, businesses need to know as much as they can about their customers. That means that the collected data needs to be stored securely. But even well-secured data can only stand up to so many attempts by nefarious actors to get to it. While the KDD process works on the data, safeguards (often complex and expensive ones) must be put in place to ensure that the data is not hacked, stolen, or compromised.

- Privacy: The user privacy issue is a huge obstacle to overcome. Customers only want to share so much about themselves, but in order to acquire more knowledge, businesses have to collect as much data as possible. Many companies’ legal terms forbid them from collecting certain information, which limits their process, and those that discreetly collect it anyway violate user privacy. When collected data is leaked or stolen, the result is an even bigger calamity.

- Takes Time: As with any learning, even of the automated variety, it should not be surprising that the KDD process is never really over. As more knowledge is acquired and applied back to bolster the process’s next iteration, additional data has been collected that requires sifting through, meaning that the process is sure to take additional time.

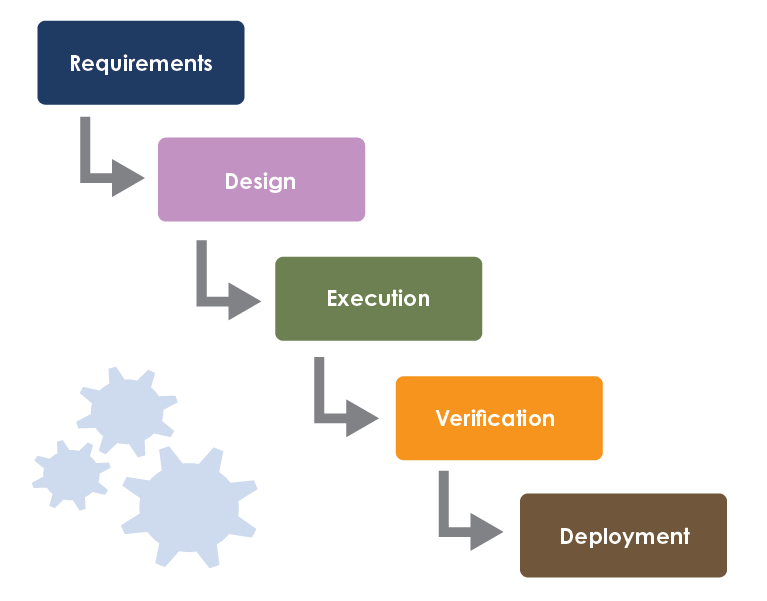

- Waterfall: While the step-by-step phased process can be used as an iterative process, it may also lead teams to fall into the rigid and sluggish shortcomings of Waterfall, which limit responsiveness to ever-shifting project needs. For best results, combine the KDD Process with more modern Agile processes.

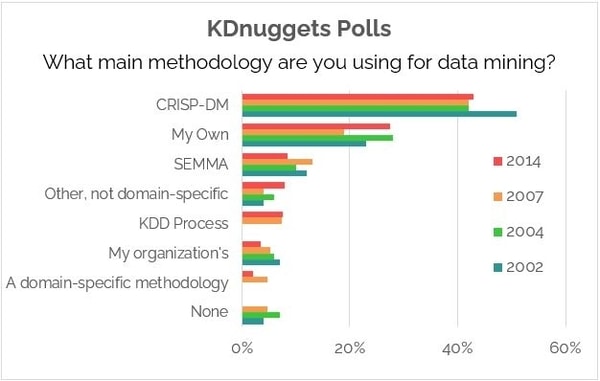

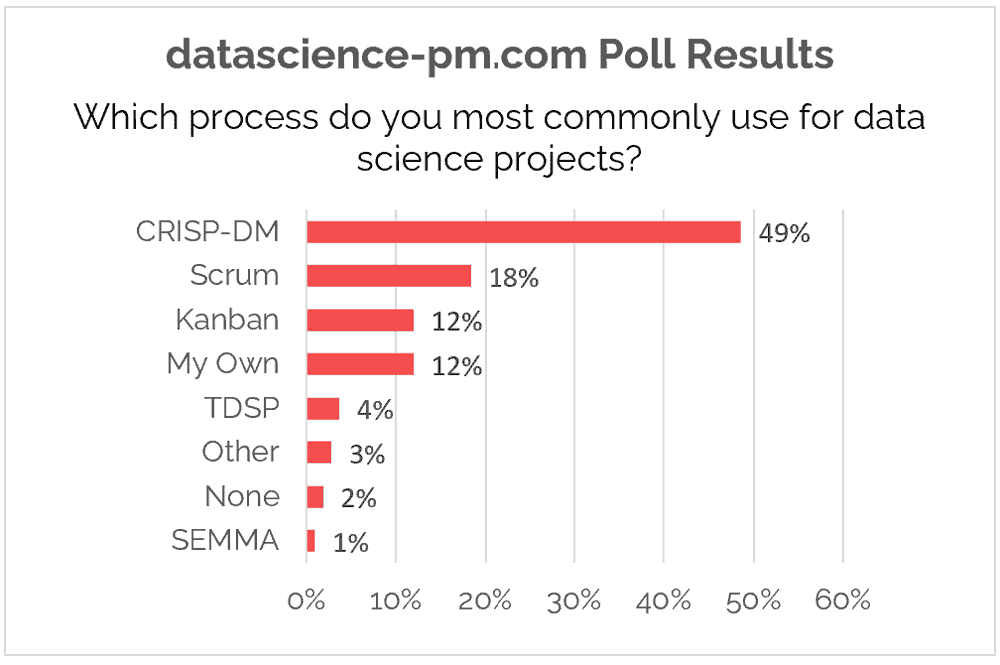

Similar Approaches: There are several data science methodologies that are in the same family of traditional data mining approaches. Two other common ones are:

- SEMMA: A specific KDD approach with 5 sequential phases developed by SAS Institute.

- CRISP-DM: A 6-phase sequential approach that is the most comprehensive of the waterfall-inspired data science approaches. It remains the most popular approach for data science projects today.

But to cater to the ever-shifting realities of team-based data science projects, you should consider more modern approaches to managing your data science process.
